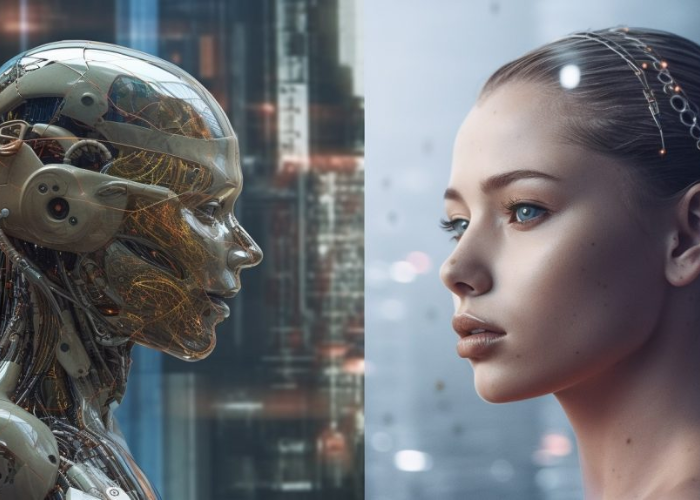

Artificial Intelligence (AI) has emerged as one of the most transformative and disruptive technologies of our time. It has the potential to reshape industries, revolutionize how we live and work, and solve complex problems. However, along with its promises, AI also brings a set of ethical dilemmas that demand our attention. One of the most pressing concerns in the realm of AI ethics is the moral landscape of autonomous machines. As we increasingly delegate decision-making to AI systems, we must navigate the complex and evolving ethical terrain they inhabit. This article explores the ethical challenges posed by autonomous AI, the consequences of our choices, and the strategies to ensure that AI aligns with our moral values.

Understanding Autonomous AI

Before delving into the ethical aspects, it’s crucial to define what we mean by autonomous AI. Autonomous AI refers to artificial intelligence systems that can operate and make decisions independently, without human intervention, based on their programming and the data they have been trained on. These systems can be found in various domains, from self-driving cars and medical diagnosis to recommendation algorithms on social media platforms.

The Ethical Dilemmas

Bias and Fairness

One of the foremost ethical challenges with autonomous AI is bias. AI systems learn from historical data, and if that data contains biases, the AI can perpetuate and even exacerbate these biases. For example, facial recognition technology has been criticized for being less accurate for people with darker skin tones, leading to discriminatory outcomes.

Addressing this issue involves both technological and ethical considerations. Developers must actively measure and reduce bias, for example by comparing a model's error rates across demographic groups before deployment, and society must establish guidelines and regulations to ensure fairness in AI applications. This challenge also underscores the need for diverse and inclusive AI development teams, who are better placed to identify and correct biases.
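One common way to check for the kind of disparity described in the facial recognition example is to compare error rates across groups. The sketch below is a minimal, assumption-laden illustration: the data, the group labels "A" and "B", and the helper names `error_rates_by_group` and `max_error_gap` are all hypothetical, and a real audit would use a held-out dataset with carefully defined demographic attributes.

```python
from collections import defaultdict

def error_rates_by_group(y_true, y_pred, groups):
    """Return the fraction of wrong predictions for each group label."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        if truth != pred:
            errors[group] += 1
    return {g: errors[g] / totals[g] for g in totals}

def max_error_gap(rates):
    """Largest difference in error rate between any two groups."""
    return max(rates.values()) - min(rates.values())

# Hypothetical toy data: true labels, model predictions, and a group attribute.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = error_rates_by_group(y_true, y_pred, groups)
print(rates)                 # {'A': 0.0, 'B': 0.75}
print(max_error_gap(rates))  # 0.75
```

Here the model is perfect for group A but wrong three times out of four for group B. Overall accuracy (62.5%) would hide that gap, which is why per-group reporting matters.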

Accountability and Responsibility

As AI systems become more autonomous, questions about accountability and responsibility become increasingly complex. When an autonomous vehicle causes an accident, who is to blame: the vehicle owner, the software developer, or the AI system itself? Determining responsibility in such cases can be challenging, and it raises legal, ethical, and insurance-related questions.

To address this dilemma, there’s a need for clear legal frameworks and ethical guidelines that specify who is responsible for AI decisions. Additionally, the development of mechanisms that enable the tracing and auditing of AI decision-making processes can help ensure accountability.
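One way to make AI decisions traceable is a tamper-evident decision log, in which each record stores a hash of the previous one so that editing any past entry breaks the chain. The following is a minimal sketch under stated assumptions: the `DecisionLog` class, its fields, and the model name `credit-model-v1` are hypothetical, and a production system would also record timestamps and store the latest hash somewhere the operator cannot alter.

```python
import hashlib
import json

def _digest(body):
    # Canonical JSON (sorted keys) so the same record always hashes identically.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

class DecisionLog:
    """Hypothetical append-only log; each entry holds the previous entry's hash."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def record(self, model_version, inputs, decision):
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "model_version": model_version,
            "inputs": inputs,
            "decision": decision,
            "prev_hash": prev_hash,
        }
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """Recompute every hash and check each link to the previous entry."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True

log = DecisionLog()
log.record("credit-model-v1", {"income": 52000}, "approve")
log.record("credit-model-v1", {"income": 18000}, "deny")
print(log.verify())  # True

log.entries[1]["decision"] = "approve"  # simulate someone rewriting history
print(log.verify())  # False
```

The hash chain only detects tampering; it does not prevent it. Someone able to rewrite the entire log could recompute every hash, which is why an auditor would typically keep an independent copy of the most recent hash.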

Transparency and Explainability

AI algorithms can be incredibly complex, making it difficult to understand how they arrive at particular decisions. This lack of transparency can erode trust in AI systems, especially when they are applied in critical domains like healthcare or finance. People have a right to know why an AI system made a decision that affects their lives.

Efforts are underway to develop techniques for making AI systems more transparent and explainable. Researchers are building “AI explainability” tools that provide insight into the reasoning behind AI decisions. These tools are essential not only for ensuring accountability but also for building trust in AI.
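For the simplest models, an explanation can be exact. For a linear scoring model, each feature's contribution relative to a baseline input is its weight times the difference from the baseline, and those contributions sum to the change in score. The sketch below illustrates that idea only; the loan-scoring weights, feature names, and baseline applicant are hypothetical. Tools such as LIME and SHAP approximate this kind of per-feature attribution for complex models where no exact decomposition exists.

```python
def linear_score(weights, bias, x):
    """Score of a hypothetical linear model: bias + sum of weight * feature."""
    return bias + sum(weights[name] * x[name] for name in weights)

def explain_linear(weights, x, baseline):
    """Per-feature contribution of x relative to a baseline input.
    For a linear model these sum exactly to score(x) - score(baseline)."""
    return {name: weights[name] * (x[name] - baseline[name]) for name in weights}

# Hypothetical loan-scoring model, "typical applicant" baseline, and one applicant.
weights = {"income_k": 0.04, "debt_ratio": -2.0, "late_payments": -0.5}
bias = 0.0
baseline = {"income_k": 50, "debt_ratio": 0.3, "late_payments": 1}
applicant = {"income_k": 40, "debt_ratio": 0.6, "late_payments": 3}

contributions = explain_linear(weights, applicant, baseline)
# List features from most negative to most positive influence on the score.
for name, value in sorted(contributions.items(), key=lambda kv: kv[1]):
    print(f"{name:>14}: {value:+.2f}")
```

Read this way, the applicant scores lower than the baseline mainly because of late payments, then debt ratio, then income, which is the kind of answer a person affected by the decision could act on.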

Privacy and Data Usage

Autonomous AI systems often rely on vast amounts of data, much of which is personal and sensitive. The ethical dilemma here is how to balance the potential benefits of using this data with the need to protect individuals’ privacy. For instance, AI-powered surveillance systems can enhance security but may also infringe on privacy rights.

To navigate this dilemma, there must be strict regulations and consent mechanisms governing the collection and use of personal data. Transparency in data practices and robust data protection laws are essential to protect individuals’ privacy while harnessing the power of AI.
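Consent mechanisms and data minimization can be enforced in code as well as in policy. The sketch below assumes a hypothetical scheme in which each user record lists the purposes the user consented to, and a hypothetical `ALLOWED_FIELDS` table limits which fields each purpose may read. It is an illustration of purpose limitation, not a description of any particular law's requirements.

```python
from dataclasses import dataclass, field

# Hypothetical mapping from processing purpose to the fields it actually needs.
ALLOWED_FIELDS = {
    "fraud_detection": {"transaction_amount", "merchant"},
    "recommendations": {"purchase_history"},
}

@dataclass
class UserRecord:
    user_id: str
    data: dict
    consented_purposes: set = field(default_factory=set)

def usable_data(records, purpose):
    """Yield (user_id, minimized_data) only for users who consented to `purpose`,
    keeping just the fields that purpose is allowed to use."""
    allowed = ALLOWED_FIELDS.get(purpose, set())  # unknown purpose: no fields
    for record in records:
        if purpose not in record.consented_purposes:
            continue  # no consent for this purpose: skip the record entirely
        yield record.user_id, {k: v for k, v in record.data.items() if k in allowed}

records = [
    UserRecord("u1",
               {"transaction_amount": 120, "merchant": "acme", "home_address": "10 Example Road"},
               {"fraud_detection"}),
    UserRecord("u2", {"purchase_history": ["book"], "merchant": "acme"}, {"recommendations"}),
]
print(list(usable_data(records, "fraud_detection")))
# [('u1', {'transaction_amount': 120, 'merchant': 'acme'})]
```

Note that even the consenting user's home address never reaches the fraud-detection step, because that purpose has no need for it.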

Job Displacement and Economic Inequality

The automation of tasks through AI and robotics has the potential to disrupt job markets and exacerbate economic inequalities. While AI can create new jobs, it may also eliminate many existing ones, particularly those that involve routine, repetitive tasks.

Society must address this challenge by preparing the workforce for the AI-driven future through education and retraining programs. Additionally, policymakers must consider strategies such as universal basic income to mitigate the potential economic inequalities that AI could bring.

Strategies for Navigating the Moral Terrain

Ethical AI Development

Developers and organizations must prioritize ethical considerations from the outset of AI development. This involves rigorous testing for bias, transparency in decision-making processes, and mechanisms for user consent and control. Ethical AI development should be a collaborative effort involving not only engineers but also ethicists, social scientists, and diverse stakeholders.

Regulatory Frameworks

Governments and regulatory bodies play a vital role in shaping the ethical landscape of autonomous AI. They should establish clear guidelines and regulations that ensure fairness, accountability, transparency, and data privacy in AI applications, and revise them as technology advances and ethical standards change.

Education and Awareness

Raising public awareness about AI and its ethical implications is essential. Education programs and initiatives should teach people about the ethical considerations surrounding AI, empowering them to make informed decisions about its use and influence.

Ethical AI Auditing

To ensure accountability, independent audits of AI systems may become a standard practice. Similar to financial audits, these audits would assess the ethical alignment of AI systems and their decision-making processes.

Interdisciplinary Collaboration

Ethical challenges in AI are multifaceted and require input from diverse fields. Collaboration between technologists, ethicists, policymakers, lawyers, and sociologists is essential to develop holistic solutions to the ethical dilemmas posed by autonomous AI.

Conclusion

The ethical dilemmas of autonomous AI are complex and multifaceted, but they are not insurmountable. By prioritizing fairness, accountability, transparency, and privacy in AI development, and by establishing clear regulatory frameworks, we can navigate the moral terrain of autonomous machines more effectively.

AI has the potential to bring about significant positive change in society, but it also carries risks. Our ability to harness AI’s benefits while mitigating its ethical challenges will determine how successfully we navigate this transformative technological landscape. It is a responsibility that we, as a society, cannot afford to neglect.